The tasks can be scheduled to run on the same machine (Master), or on a different machine (Slave). A master is a installation of Hudson, that can manage one or more slaves. The role of master remains same in Master slave setup. It serves HTTP requests, and it can still build projects on it’s own.

Slaves are computers that are setup to build projects triggered from the master. A separate program called slave agent runs on slave computer. In this article we’ll discuss about how to setup Hudson to executed distributed builds using Master slave. One computer can be configured to execute multiple slave agents.

How it works

When the slaves are registered to a master, the master starts distributing loads to slaves. The delegation depends on the specific job. The job can be configured to either execute on the master, or it can be tied to a specific slave. On the other hand, the jobs can be configured to freely roam between slaves, wherein the job is executed using the free slave. As per the user is concerned, the setup is transparent. The results for all jobs are viewable using the Master, irrespective of the Slave that executed the job.

The slave may be built using any Operating system. The Master slave setup is highly helpful while the user has to execute the job on different Operating system. Consider this use case: The application is expected to run on different operating system, Linux, Solaris and Windows system. To address this need, the user can install Hudson on any machine, say Linux, and add 2 slaves: Solaris and Windows. The user can add 3 different jobs, one running on Master itself and others running on slave machines.

Methods to set up Slave agents

The slave can be launched from Master using any of below methods:

- Launch Slave agents on Unix machines via SSH.

- Launch Slave agents via JNLP.

- Launch Slave agents via execution of command on the master.

- Let Hudson control windows slave as a windows service.

The following screenshot illustrates the list of modes under which the Hudson slave can be launched.

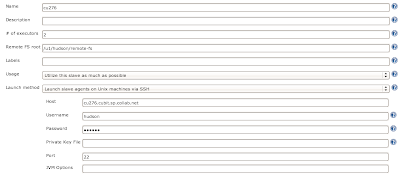

Set up Slave agents on Unix machines via SSH

Hudson has a built-in SSH client implementation that it can use to talk to remote sshd and start a slave agent. This is the most convenient and preferred method for Unix slaves, which normally has sshd out-of-the-box. Click Manage Hudson, then Manage Nodes, then click New Node. In this set up, the connection information is supplied, including SSH host name, user name and authentication credentials. The authentication is performed using password or ssh keys. If it is configured to use ssh keys, the SSH public key should be copied to ~/.ssh/authorized_keys file. Hudson will do the rest of the work by itself, including copying the binary needed for a slave agent, and starting/stopping slaves.

Depending on the project and hardware resource availability, the user Desktop can be used as one of Slave, without affecting his day-to-day activities, thus avoiding the need for dedicated Slaves.

Establish slave agent via Java Web Start

Another way of launching slave is to start a slave agent through Java Web Start (JNLP). In this approach, the user will login to the slave node, open a browser and open the slave page using the URL pointed to the Master. It may look like the following URL:

http://masterserver:port/hudson/jnlpJars/slave.jar

The user is presented with the JNLP launch icon. If user click the icon, the Java Web Start kicks in and it launches a slave agent on this computer.

This mode is convenient when the master cannot initiate a connection to slaves, such as when it runs outside a firewall while the rest of the slaves are in the firewall. The disadvantage is, if the machine with a slave agent goes down, the master has no way of re-launching it on its own.

Set up slave agent headlessly

This launch mode uses a mechanism very similar to Java Web Start, except that it runs without using GUI, making it convenient for an execution as a daemon on Unix. To do this, the user should configure this slave to be a JNLP slave by downloading slave.jar, and then from the slave, run a command like this:

java -jar slave.jar -jnlpUrl http://yourserver:port/computer/slave-name/slave-agent.jnlp

The slave.jar file is downloaded from the above mentioned URL. Make sure to replace slave-name with the name of the slave setup in Master.

By default, Hudson runs on port 8080. It can be installed and managed without the need for super user privilege. The super user privilege is not required to manage both Master and Slave.

The below diagram illustrates the list of configuration parameters specific to a slave.

set up Slave Agent using own scripts

If the above modes is not flexible, the user can write his own script to launch the Slave agent. The script is placed in the Master computer and Hudson runs this script whenever it should connect to the slave. The script may use the remote login program like SSH, RSH to establish connection between Master and slave.

The script would execute the slave agent program like java -jar slave.jar. The stdin and stdout for the script should be connected to the master. For example, the script that does ssh myslave java -jar ~/bin/slave.jar would satisfy this need, when it is executed from the Master web interface. For this reason, running this script manually from the command line does no good.

The copy of slave.jar can be downloaded from the above mentioned URL. Launching the slave agent using this mode requires additional setup in the Slave. The benefit is that when the connection goes bad, the user can use Hudson web interface to re-establish the connection.